Multiagent decision processes:

Formal models for multiagent decision making

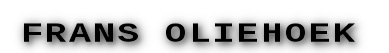

Multiagent systems are complex: they involve intricate interactions and can live in complex environments. In order to make any sense of them, much of my research focuses on formal models for multiagent decision making. These models include various extensions of the Markov decision process (MDP), such as multiagent MDPs (MMDPs), decentralized MDPs (Dec-MDPs), decentralized partially observable MDPs (Dec-POMDPs) and partially observable stochastic games (POSGs). I refer to the collection of such models as multiagent decision processes (MADPs).

Pointers:

- The Dec-POMDP book tries to give a clear explanation of the landscape of MADPs.

- The MADP Software toolbox implements many MADPs and some of their solution methods.

Decentralized POMDPs

In particular, much of my research addresses the framework of decentralized POMDPs (Dec-POMDPs). A Dec-POMDP models a team of cooperative agents situated in an environment that they can only observe partially (e.g., due to limited sensors). To add to the complexity, each team member can only base its actions on its own observations.
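To make this definition concrete, here is a minimal Python sketch of a Dec-POMDP. It assumes the commonly used tuple formulation with these components:

- a set of agents
- states S and individual actions A_i
- a transition function T(s'|s,a)
- a shared team reward R(s,a)
- individual observations with an observation function O(o|a,s')
- a horizon h and an initial state distribution b0

The names `DecPOMDP`, `simulate`, `two_agent_example` and `example_policies` are hypothetical illustrations, not the API of the MADP Software toolbox. The point to notice is in `simulate`: each agent's policy receives only its own observation history, never the hidden state or its teammates' observations.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

State = str
Action = str
Obs = str
JointAction = Tuple[Action, ...]
JointObs = Tuple[Obs, ...]


@dataclass
class DecPOMDP:
    """Hypothetical minimal Dec-POMDP container (illustration only)."""
    n_agents: int
    transition: Callable[[State, JointAction], Dict[State, float]]  # T(s' | s, a)
    observe: Callable[[JointAction, State], Dict[JointObs, float]]  # O(o | a, s')
    reward: Callable[[State, JointAction], float]                   # shared team reward R(s, a)
    initial: Dict[State, float]                                     # initial state distribution b0


# A local policy maps an agent's OWN observation history to an individual action.
LocalPolicy = Callable[[Tuple[Obs, ...]], Action]


def sample(dist: Dict, rng: random.Random):
    """Draw one outcome from a {outcome: probability} dictionary."""
    outcomes = list(dist)
    return rng.choices(outcomes, weights=[dist[o] for o in outcomes], k=1)[0]


def simulate(model: DecPOMDP, policies: List[LocalPolicy], horizon: int,
             rng: random.Random) -> float:
    """Run one episode with decentralized execution and return the total team reward."""
    state = sample(model.initial, rng)
    histories: List[Tuple[Obs, ...]] = [() for _ in range(model.n_agents)]
    total = 0.0
    for _ in range(horizon):
        # Each agent acts on its own history only; nobody sees the state.
        joint_action = tuple(policies[i](histories[i]) for i in range(model.n_agents))
        total += model.reward(state, joint_action)
        state = sample(model.transition(state, joint_action), rng)
        joint_obs = sample(model.observe(joint_action, state), rng)
        # Agent i receives only component i of the joint observation.
        histories = [histories[i] + (joint_obs[i],) for i in range(model.n_agents)]
    return total


def two_agent_example(initial: Dict[State, float]) -> DecPOMDP:
    """Hypothetical toy problem: a static hidden state "L" or "R".

    Agent 0 has a perfect sensor; agent 1 only ever observes "none".
    The team earns 1 per step when BOTH agents choose the action matching the state.
    """
    return DecPOMDP(
        n_agents=2,
        transition=lambda s, a: {s: 1.0},              # the state never changes
        observe=lambda a, s_next: {(s_next, "none"): 1.0},
        reward=lambda s, a: 1.0 if a == (s, s) else 0.0,
        initial=initial,
    )


# Hypothetical local policies: agent 0 repeats its latest observation (default "L");
# agent 1 has no informative observations, so it always guesses "L".
example_policies: List[LocalPolicy] = [lambda h: h[-1] if h else "L", lambda h: "L"]
```

In this toy problem agent 0 can see the hidden state but agent 1 cannot. The team is therefore rewarded only when agent 1's fixed guess happens to be right. With b0 = {"L": 1.0}, a three-step run yields a total reward of 3.0. With b0 = {"R": 1.0}, it yields 0.0, even though agent 0 knows the state after the first step.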

Learning about Dec-POMDPs

If you want to learn about Dec-POMDPs, a good place to start might be these:

- The book that Chris Amato and I wrote. Check it out here or at Springer.

- Shorter, but also denser, is the book chapter that I wrote.

Alternatively, you may want to have a look at the first two chapters of my PhD thesis, or check out the survey article by Seuken & Zilberstein (2008, JAAMAS).

See also:

- Some of the lectures I give, available under teaching materials